No one enjoys prior authorizations.

Not patients.

Not providers.

Definitely not admin teams.

You chase down codes.

Wait on faxes.

Call payers for the third time this week.

All just to approve something that should have been automatic in the first place.

So yeah, bringing in AI to speed things up makes perfect sense.

AI can sort the data.

Autofill the forms.

Flag missing info.

Submit the request while you move on.

But here’s the catch:

Who’s responsible when the AI gets it wrong?

What if it flags the wrong code?

Or delays care?

Or denies it altogether?

AI can solve a huge admin headache. It can also create ethical minefields if you’re not paying attention.

This isn’t about whether you can use AI for prior auth.

It’s about how to use it without losing control, harming patients, or breaking trust.

Why Prior Authorizations Are a Critical Pain Point

If there’s one process almost everyone in healthcare agrees is broken, it’s prior authorizations.

They were supposed to reduce unnecessary care. In reality? They create bottlenecks that hurt everyone, especially the teams stuck managing them behind the scenes.

What PAs Are and Why They Exist

Prior authorizations (PAs) are meant to control costs and ensure treatments are medically necessary before a payer agrees to cover them.

The logic isn’t flawed. The execution is.

Instead of a streamlined digital process, most prior auths still rely on:

Manual data entry

Faxes and phone calls

Long wait times with inconsistent feedback

According to the American Medical Association (AMA), 93% of physicians report care delays due to prior authorizations, and 82% say the process can lead to treatment abandonment.

This isn’t just inefficient. It’s dangerous.

The Operational Burden for Admin Teams

Prior auths are eating your team’s week alive.

According to the same AMA report, healthcare staff spend an average of 13 hours per week per physician just managing prior auth requests.

Let that sink in.

That’s nearly two full workdays gone.

Not on patient care. Not on strategy. On just getting approvals.

What does that look like for admin teams?

Logging into payer portals

Chasing down missing clinical documentation

Manually filling out forms

Re-entering the same patient info across disconnected systems

Following up when the response doesn’t come back

It’s not just frustrating. It’s unsustainable.

Which is why AI is entering the picture. But speed can’t come at the cost of ethical oversight.

How AI Can Support Prior Authorization Workflows

AI isn’t here to replace your prior auth team. It’s here to keep them from drowning in busywork.

When used correctly, AI can accelerate authorizations, reduce delays, and free up your staff to focus on exceptions, not endless form-filling.

Here’s how it works in practice.

Automating Repetitive Tasks

Prior authorization involves the same frustrating steps again and again. AI assistants step in to handle them, without changing your core systems.

What that looks like:

Extracting patient data from the EHR or intake forms

Auto-filling prior auth request forms based on payer-specific templates

Attaching relevant documentation (like notes, labs, or imaging) based on diagnosis codes

Submitting the request through payer portals or third-party systems

Tools like Magical let teams automate these micro-tasks directly in their browser.

No integrations, no IT bottlenecks. Just time saved, instantly.
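To make the pre-fill step concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how Magical or any payer portal works: the `PAYER_TEMPLATES` table, the field names, and the `prefill_request` helper are all hypothetical. The idea is simply to copy known values into a payer-specific template and list what is still missing, so a person can review the draft before anything is submitted.

```python
from dataclasses import dataclass, field

# Hypothetical payer template: the fields one payer's form requires.
# Real payer forms differ; these names are illustrative only.
PAYER_TEMPLATES = {
    "example_payer": ["patient_name", "member_id", "diagnosis_code", "procedure_code"],
}


@dataclass
class DraftRequest:
    payer: str
    values: dict = field(default_factory=dict)
    missing: list = field(default_factory=list)

    @property
    def ready_for_review(self) -> bool:
        # A draft is only handed to a reviewer once nothing is missing.
        return not self.missing


def prefill_request(payer: str, record: dict) -> DraftRequest:
    """Copy known values into the payer's template and list what is missing."""
    draft = DraftRequest(payer=payer)
    for name in PAYER_TEMPLATES[payer]:
        value = record.get(name)
        if value:
            draft.values[name] = value
        else:
            draft.missing.append(name)
    return draft


# Made-up record with no diagnosis code on file.
record = {"patient_name": "Test Patient", "member_id": "M-0001", "procedure_code": "70553"}
draft = prefill_request("example_payer", record)
print(draft.missing)           # ['diagnosis_code']
print(draft.ready_for_review)  # False
```

The sketch never submits anything. It only prepares a draft and surfaces the gaps, which is the part of the workflow that is safest to automate first.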

Smart Decision Support

AI can also help you decide if a prior auth is even needed in the first place.

By cross-referencing procedure codes with payer policies, some systems flag:

Whether prior auth is required

What documentation will be expected

Whether a previous approval already exists

Instead of guessing or digging through PDFs, your team gets real-time intelligence.

This isn’t just convenient. It prevents wasted time on unnecessary submissions and gets patients treated faster.
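The three checks above can be sketched as a simple lookup. This is a hedged example: the `PAYER_POLICIES` table, its contents, and the `check_prior_auth` function are assumptions made for illustration, and real payer rules are far more detailed and change often.

```python
from datetime import date

# Hypothetical policy table keyed by (payer, procedure code).
PAYER_POLICIES = {
    ("example_payer", "70553"): {
        "requires_pa": True,
        "documents": ["clinical notes", "prior imaging"],
    },
    ("example_payer", "99213"): {"requires_pa": False, "documents": []},
}


def check_prior_auth(payer, procedure_code, approvals, today):
    """Return whether a PA is needed, what to attach, and if one already exists."""
    policy = PAYER_POLICIES.get((payer, procedure_code))
    if policy is None:
        # No rule on file: say so instead of guessing.
        return {"status": "unknown", "documents": []}
    if not policy["requires_pa"]:
        return {"status": "not_required", "documents": []}
    for approval in approvals:
        still_valid = approval["expires"] >= today
        if approval["procedure_code"] == procedure_code and still_valid:
            return {"status": "already_approved", "documents": []}
    return {"status": "required", "documents": policy["documents"]}


today = date(2025, 1, 1)
approvals = [{"procedure_code": "70553", "expires": date(2030, 1, 1)}]

print(check_prior_auth("example_payer", "70553", [], today)["status"])         # required
print(check_prior_auth("example_payer", "70553", approvals, today)["status"])  # already_approved
print(check_prior_auth("example_payer", "00000", [], today)["status"])         # unknown
```

Returning `unknown` when no policy is on file matters more than it looks. A system that guesses in that case is exactly the kind of quiet failure the next section warns about.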

Reducing Turnaround Time

Delays in prior auth = delays in care = frustrated patients and providers.

AI cuts that lag time by:

Submitting complete, clean requests up front (fewer rejections)

Avoiding manual rework due to missing data

Tracking status updates automatically so you don’t miss a response

Some organizations using AI for prior auth have reported turnaround time reductions of 30–50%.

That’s not theoretical. That’s operational relief your team can feel.

AI can speed things up. But without the right guardrails, it can also raise red flags. That’s why the next section matters most.

Ethical Considerations When Using AI for Prior Authorizations

Faster isn’t always better, not if it means losing control over decisions that impact patient care.

Prior authorizations are already a high-stakes part of the healthcare system. Add automation without clear boundaries, and you risk creating a faster version of the same broken process.

AI needs to accelerate the right things without introducing new forms of bias, error, or confusion.

Here’s what admin teams, compliance leads, and ops leaders should keep in mind.

Fairness and Equity

If your AI system was trained on flawed or biased data, it can replicate (or even amplify) existing inequities in healthcare access.

What does that look like?

Certain patient groups getting flagged more frequently

Certain providers getting disproportionately denied

Automated “rules” that don’t reflect clinical nuance

According to a study in Health Affairs, algorithmic bias in healthcare can lead to unequal treatment recommendations, especially for historically underserved populations.

Fixing this starts with:

Auditing your AI models regularly

Using diverse and representative training data

Ensuring humans still review edge cases or sensitive flags

Transparency and Explainability

If the AI denies a prior auth request, your team should be able to explain why, both to providers and to patients.

That’s a big ask with many off-the-shelf tools. Too many operate like black boxes: they surface results but don’t show their logic.

To maintain trust and stay compliant, your AI system should:

Offer clear reasoning behind its suggestions or rejections

Log every step it takes

Allow staff to trace decision paths in real time

If your admin team doesn’t understand how the AI works, it’s not a tool. It’s a liability.
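One way to picture “log every step” and “trace decision paths” is a small decision log that stores a plain-language reason next to each outcome. The `DecisionLog` class below is a hypothetical sketch, not a feature of any specific tool, and the request IDs and step names are invented.

```python
from datetime import datetime, timezone


class DecisionLog:
    """Keeps one entry per automated step, each with a human-readable reason."""

    def __init__(self):
        self._entries = []

    def record(self, request_id, step, outcome, reason):
        entry = {
            "request_id": request_id,
            "step": step,
            "outcome": outcome,
            "reason": reason,
            "at": datetime.now(timezone.utc).isoformat(),
        }
        self._entries.append(entry)
        return entry

    def trace(self, request_id):
        # Every step taken for one request, in the order it happened.
        return [e for e in self._entries if e["request_id"] == request_id]

    def explain(self, request_id):
        # A short summary staff can read aloud to a provider or patient.
        return "\n".join(
            f"{e['step']}: {e['outcome']} because {e['reason']}"
            for e in self.trace(request_id)
        )


log = DecisionLog()
log.record("PA-1", "policy_check", "PA required", "payer policy lists this procedure code")
log.record("PA-1", "completeness", "flagged", "clinical notes are not attached")
log.record("PA-2", "policy_check", "PA not required", "payer policy exempts this code")

print(log.explain("PA-1"))
```

If a tool cannot produce something like the output of `explain`, staff are left relaying a result they cannot justify.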

Accountability

AI can help make decisions, but it shouldn’t be the final decision-maker.

Why? Because your organization is still responsible when things go wrong:

A prior auth gets denied due to a missing attachment the AI didn’t catch

A time-sensitive treatment is delayed because the system flagged it incorrectly

A payer requests documentation that your system didn’t submit

Accountability in AI workflows means:

Clear human-in-the-loop checkpoints

Protocols for overrides and escalations

Continuous monitoring for edge cases or anomalies

Admins aren’t off the hook, and they shouldn’t want to be.

Patient Privacy and Data Security

AI tools working on prior authorizations interact with sensitive health data, including PHI, payer details, and clinical documentation.

This isn’t optional: your AI solution must comply with HIPAA at a minimum, and a recognized security certification such as HITRUST is a strong signal on top of that.

It should also:

Avoid storing PHI unnecessarily

Be fully auditable

Be browser-secure (especially if your team is remote or distributed)

Magical is designed with these standards in mind. It works inside your browser, doesn’t store sensitive data, and keeps your workflows secure while enhancing speed and accuracy.
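As a generic illustration of “avoid storing PHI unnecessarily” (and not a description of how Magical or any other product handles data), here is a small sketch that masks sensitive fields before a record is written to a log. The `PHI_FIELDS` set is an assumption and is deliberately incomplete: what counts as PHI depends on context, so your compliance team should own the real list.

```python
# Assumed, incomplete list of sensitive field names for this example only.
PHI_FIELDS = {"patient_name", "member_id", "date_of_birth"}


def redact_for_logging(record: dict) -> dict:
    """Return a copy of the record that is safer to log; the original is untouched."""
    return {
        key: "[REDACTED]" if key in PHI_FIELDS else value
        for key, value in record.items()
    }


record = {"patient_name": "Test Patient", "member_id": "M-0001", "status": "submitted"}
print(redact_for_logging(record))
# {'patient_name': '[REDACTED]', 'member_id': '[REDACTED]', 'status': 'submitted'}
```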

Guiding Principles for Ethical AI Use in Prior Auth

AI can make prior authorization easier. But if it’s not built and used with guardrails, it can also make things worse, faster.

The good news? You don’t need to be an ethicist to get this right.

You just need a clear framework to guide how AI gets introduced, monitored, and scaled across your team.

Here’s what ethical AI use actually looks like in the real world of healthcare admin.

Human-in-the-Loop Design

AI should support decisions, not make them in isolation.

In a human-in-the-loop model:

Admins review AI-prepared requests before submission

Staff can override or adjust any field flagged by the system

Exception cases are routed for manual review, not auto-denial

This keeps your team accountable and empowered. The AI moves fast, but humans stay in control.
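The routing logic behind that model can be sketched in a few lines. This is a simplified example under assumptions: the `REVIEW_THRESHOLD` value, the queue names, and the shape of the `suggestion` dictionary are hypothetical. The point is that no path ends in an automatic denial, and every override is recorded.

```python
from enum import Enum


class Route(Enum):
    STANDARD_REVIEW = "standard_review"    # a person confirms before submission
    EXCEPTION_REVIEW = "exception_review"  # a person works the case manually


REVIEW_THRESHOLD = 0.85  # assumed cutoff; tune it with your own data


def route(suggestion: dict) -> Route:
    """Send every request to a person. There is no auto-deny branch."""
    uncertain = suggestion["confidence"] < REVIEW_THRESHOLD
    if suggestion["recommendation"] == "deny" or uncertain:
        return Route.EXCEPTION_REVIEW
    return Route.STANDARD_REVIEW


def apply_override(suggestion: dict, field_name: str, new_value, reviewer: str) -> dict:
    """Return a corrected copy of the suggestion and note who changed what."""
    updated = dict(suggestion)
    updated["fields"] = {**suggestion["fields"], field_name: new_value}
    updated["overrides"] = suggestion.get("overrides", []) + [
        {"field": field_name, "by": reviewer}
    ]
    return updated


suggestion = {
    "recommendation": "approve",
    "confidence": 0.95,
    "fields": {"diagnosis_code": "G43.909"},
}
print(route(suggestion))                            # Route.STANDARD_REVIEW
print(route({**suggestion, "confidence": 0.40}))    # Route.EXCEPTION_REVIEW

fixed = apply_override(suggestion, "diagnosis_code", "G43.919", reviewer="admin_1")
print(fixed["overrides"])                           # [{'field': 'diagnosis_code', 'by': 'admin_1'}]
```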

Transparency in AI Outputs

Your team shouldn’t have to guess why the AI is doing what it’s doing.

Whether it’s recommending a procedure code, selecting documentation, or pre-filling payer information, the reasoning should be visible and understandable.

Key features to look for:

Clear audit trails

Annotations or tooltips that explain logic

Simple language, not machine jargon

If your AI tool can’t explain itself to an admin assistant in under 10 seconds, it’s too opaque.

Bias Auditing and Model Monitoring

Even if your AI works great out of the box, it needs ongoing checks to ensure fairness.

Best practices include:

Regular audits of prior auth outcomes by patient population, provider group, or diagnosis type

Retraining or adjusting models as payer rules or coding standards evolve

Flagging unusual decision patterns, like a spike in denials for a specific region or specialty

Bias in healthcare doesn’t always look obvious. Your AI needs to help spot it, not scale it.
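A basic version of that audit is just counting. The sketch below compares each group’s denial rate with the overall rate and flags groups that sit well above it. The `max_gap` and `min_cases` values and the sample data are made up for the example, and a flagged gap is a prompt for human review, not proof of bias.

```python
from collections import defaultdict


def denial_rates(outcomes, group_key):
    """Denial rate per group, e.g. per region, specialty, or patient population."""
    totals = defaultdict(int)
    denials = defaultdict(int)
    for outcome in outcomes:
        group = outcome[group_key]
        totals[group] += 1
        if outcome["denied"]:
            denials[group] += 1
    return {group: denials[group] / totals[group] for group in totals}


def flag_outliers(outcomes, group_key, max_gap=0.10, min_cases=30):
    """List groups whose denial rate exceeds the overall rate by more than max_gap."""
    overall = sum(1 for o in outcomes if o["denied"]) / len(outcomes)
    counts = defaultdict(int)
    for outcome in outcomes:
        counts[outcome[group_key]] += 1
    rates = denial_rates(outcomes, group_key)
    return sorted(
        group
        for group, rate in rates.items()
        # Skip tiny groups, where one denial swings the rate too much.
        if counts[group] >= min_cases and rate - overall > max_gap
    )


# Made-up outcomes: 2 of 10 denied in "north", 5 of 10 denied in "south".
outcomes = (
    [{"region": "north", "denied": False}] * 8
    + [{"region": "north", "denied": True}] * 2
    + [{"region": "south", "denied": False}] * 5
    + [{"region": "south", "denied": True}] * 5
)

print(denial_rates(outcomes, "region"))                # {'north': 0.2, 'south': 0.5}
print(flag_outliers(outcomes, "region", min_cases=5))  # ['south']
```

Run the same check by provider group and diagnosis type, and rerun it whenever payer rules or the model change.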

Consent and Communication

If AI is playing a role in prior authorization, your team should be ready to communicate that clearly, both internally and externally.

That might include:

Informing providers that their prior auth workflows include AI support

Explaining how flagged decisions are handled

Ensuring patients have a path to escalate concerns tied to delays or denials

It’s not about creating fear. It’s about building trust.

When people know automation is there to support them, not sidestep them, adoption gets easier and outcomes get better.

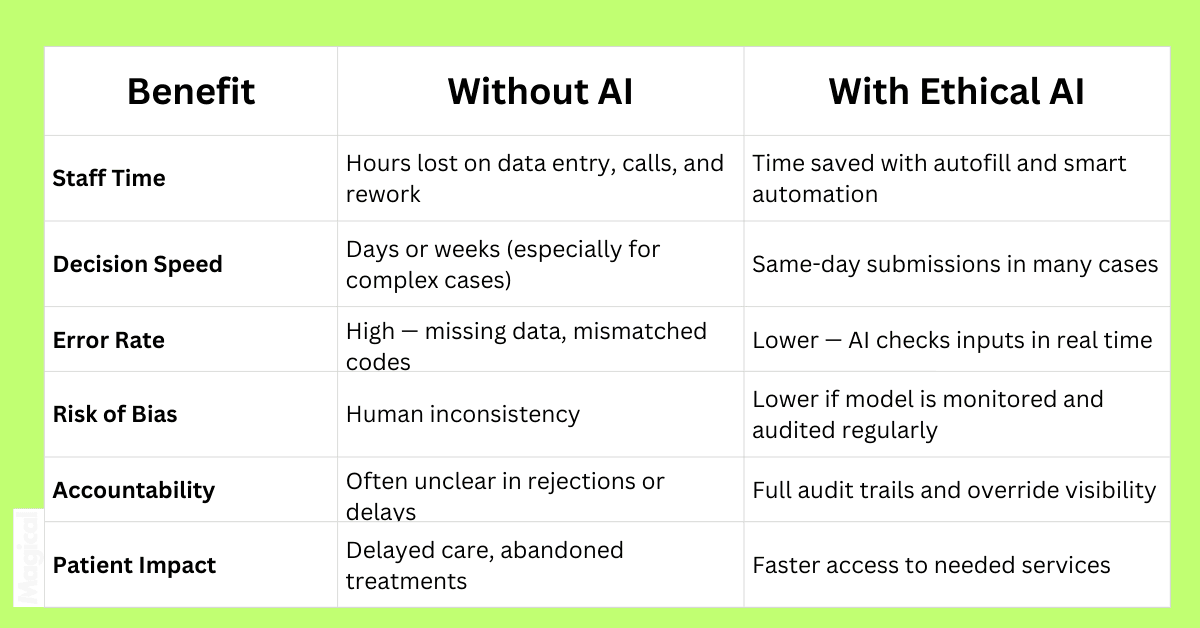

Benefits of Ethical AI in Prior Auth Workflows

AI that’s fast but reckless is a liability.

AI that’s fast, smart, and responsible? That’s a workflow upgrade.

Here’s how ethical AI transforms prior authorization operations, from chaos control to actual clarity.

When AI works with your team (not around them), the results aren’t just faster. They’re better.

You don’t lose control. You gain visibility.

You don’t automate blindly. You automate responsibly.

Questions Healthcare Admin Teams Should Ask Before Adopting AI for PAs

Before you bring AI into your prior authorization process, ask better questions than: “Will it make us faster?”

Speed is great, but without the right guardrails, you’ll end up automating the very problems you were trying to fix.

Here’s what smart healthcare admin teams are asking before they roll out AI for prior auth:

1. What data is the AI using, and where is it coming from?

If the system is trained on biased, outdated, or incomplete data, it will make bad decisions. Full stop.

Make sure your AI tool uses verified, healthcare-specific datasets and doesn’t pull from random or generic models.

2. Does the tool support a human-in-the-loop workflow?

If AI is submitting, flagging, or denying anything without human oversight, that’s a red flag.

Ask whether your team has override access, visibility into suggestions, and the ability to adjust decisions.

3. How are errors tracked and corrected?

Mistakes happen even with AI. Find out how the tool handles:

Incorrect form submissions

Mismatched diagnosis codes

Missing documentation

And ask: Will the system learn from corrections?

4. Does this solution comply with HIPAA and other healthcare regulations?

This isn’t optional. Your AI platform must:

Be secure

Avoid storing PHI unnecessarily

Offer full audit logs

Be transparent about how it handles sensitive data

Bonus points if it’s browser-based and doesn’t touch your infrastructure, like Magical.

5. How are patients informed, if at all?

If the tool plays a role in prior auth outcomes, your team should be ready to explain how it works to patients or clinicians.

Transparency builds trust. Trust keeps patients from abandoning care when things get delayed.

AI should make things easier, not raise new questions every time it runs.

Ask the hard stuff now, and you won’t have to answer even harder stuff later.

Final Thoughts: Doing Prior Auth Right With Ethics and AI

The prior auth process is broken. You already know that.

What matters now is how you fix it, without creating new risks, blind spots, or trust gaps along the way.

AI is the tool.

But ethics is the system that makes the tool sustainable.

When you combine automation with accountability, transparency, and a human-first mindset, you don’t just speed up authorizations. You:

Reduce denials

Protect patients

And empower your admin team to do their best work

Don’t just plug in another “smart” system and hope it saves time.

Build something better. On purpose.

Want to Automate Prior Auth Work Responsibly?

Download the free Magical Chrome extension or book a demo for your team today!

Magical is trusted by 100,000+ companies and nearly 1,000,000 people to save 7 hours per week, automating admin work without storing PHI or disrupting your existing systems.